Data Compression - Prefix Free Code and Length Optimisation (ICT Part 2)

Data Compression - Prefix Free Code and Length Optimisation

In my last post I arrived at the requirement for a prefix-free code . Now, I will discuss how we can obtain prefix-free codes and then elucidate on length optimisation for data compression.

The naive way to check whether a code is prefix-free would be to brute force through each and check whether such that .

We want to make this check faster; one way to do this is to use Kraft's inequality. The theorem is stated as follows:

Kraft's Inequality

Given and length of , if is prefix-free then

Proof: We have a structured notation, we assume an ordering of the s []; however, the summation formula in the kraft's inequality works even without such ordering. Using this inequality we get that is the maximum length of the codewords mapped from and using the we have:

Suppose we have a prefix-free code ,

- Construct a binary tree of height .

- Label the nodes as follows:

- The root is labeled as

- Nodes at height (left child first, then the right child) get respectively.

- Whenever a node has a label , the left child of that node gets & the right child gets the label .

- At height some node with label as (the number of nodes in this layer are , all strings in are contained in this layer; same follows for all ), we will call this node . Now, take the subtree root at the node labeled as ; no for should be a label of one of the nodes in this subtree. The number of leaf nodes of this subtree are (since we now have a binary tree with height ).

- All subtrees with roots as have to be disjoint as is prefix-free.

- The total number of leaves of theses subtrees put together , since is the maximum number of nodes in the entire tree.

Hence, we have proved that if is prefix-free then .

Length for Data Compression

Up till now I have just discussed how to form codes that are decodeable. Now, I will elucidate on optimising the length for codewords.

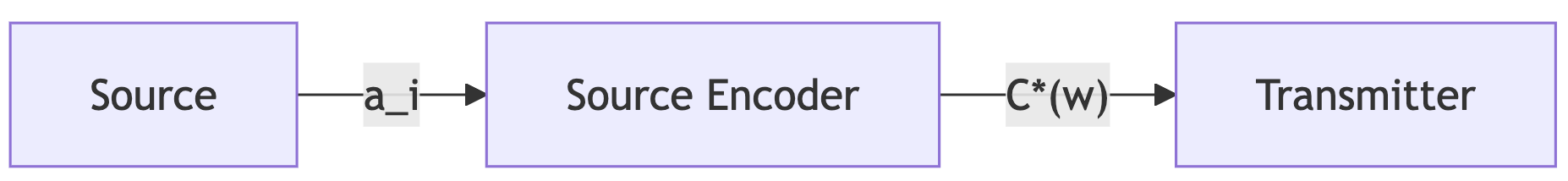

The source input is the set . The source encoder outputs , and is formed by appending these outputs.

Let .

Eg. if , then and . We want a encoding function such that this is minimum for maxmimum number of source data .

Solving this problem won't be as simple as selecting a such that for one specific we have as we can have some such that .

We want to minimise the codeword length for a given source distribution (a correct way to describe a distribution is with probabilities, which I will just get to).

Probability Distribution for

Define: such that

Eg. we have and for a given source is obtained over a large sampling of inputs as (the probability of the source sending an to be transmitted):

Using we obtain a model of the source distribution as .

If then we get

Now for a given , using the values we are able to calculate the expected length of a codeword retrieved from the source distribution as:

Hence, given a source distribution , to minimise the codeword length over the entire domain we need to choose a such that is minimised and ( should remain prefix-free so that the codewords are efficiently decodable).